
Remote MCP

Launch of Remote MCP

Model Context Protocol (MCP): A Brief Overview

The Model Context Protocol (MCP) is an open standard developed by Anthropic to streamline the integration of AI assistants with external data sources, tools, and systems. Its primary goal is to provide AI models with real-time, relevant, and structured information while maintaining security, privacy, and modularity.

Core Concepts

Standardized Integration: MCP offers a universal protocol for connecting AI systems with various data sources, replacing fragmented integrations with a single, cohesive standard.

Client-Server Architecture: Developers can expose their data through MCP servers or build AI applications (MCP clients) that connect to these servers, facilitating secure, two-way communication.

Live Knowledge Access: By connecting to real-time graphs of both private (internal repositories, documents) and public (web data, APIs) sources, MCP ensures AI assistants have access to up-to-date information.

Mentions System: Users can guide AI attention using mentions (e.g., @auth_service.py, @frontend-repo), allowing the AI to focus on specific files, repositories, or systems.

Security and Compliance: MCP enforces access controls, data governance, and security policies automatically, ensuring sensitive or restricted information is properly handled.
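To make the client-server idea above a little more concrete: MCP messages are JSON-RPC 2.0 objects, and a client discovers and invokes server capabilities with methods such as tools/list and tools/call. The sketch below only builds the request payloads and does not open a connection. The tool name search_issues and its arguments are hypothetical, chosen purely for illustration.

```python
import json
from itertools import count
from typing import Optional

_ids = count(1)  # each request in a session gets its own id


def make_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request object, the envelope MCP messages use."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


# Ask the server which tools it exposes.
list_tools = make_request("tools/list")

# Invoke one tool. "search_issues" and its arguments are hypothetical.
call_tool = make_request(
    "tools/call",
    {"name": "search_issues", "arguments": {"query": "login bug"}},
)

print(json.dumps(call_tool, indent=2))
```

In a real client, an MCP SDK would normally handle this framing along with the transport, so treat this as a view of the wire format rather than code you would ship.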

Adoption

MCP has been adopted by major AI providers, including OpenAI and Google DeepMind, and is supported by various tools and platforms. Its open-source nature and standardized approach have made it a rapidly emerging standard for connecting AI models to data sources.

In summary, MCP acts as a universal adapter between AI applications and external tools or data sources, simplifying the integration process and enhancing the capabilities of AI assistants across various domains.

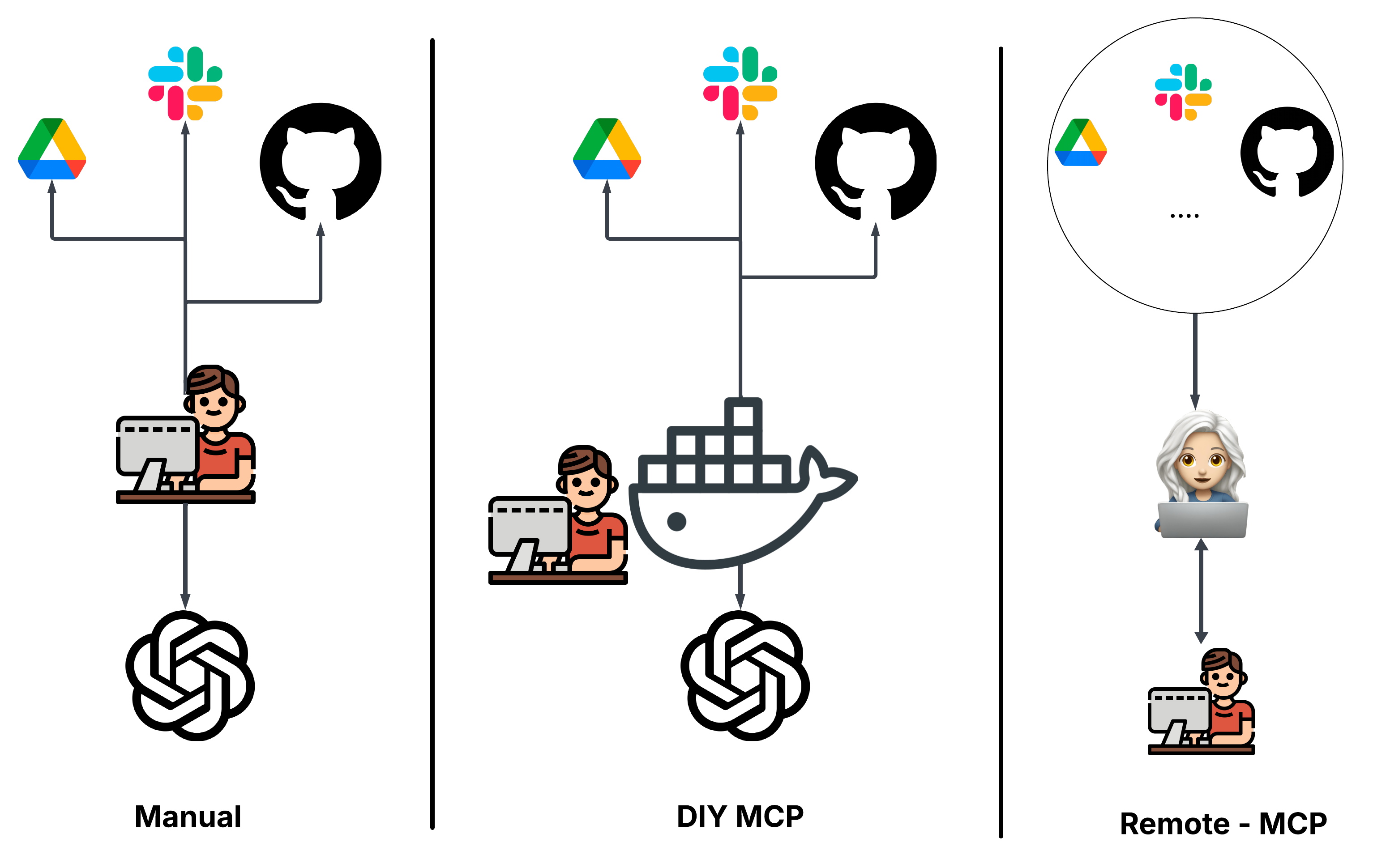

MCP Requires a DIY Approach with Docker Containers

One major operational hurdle when using the Model Context Protocol (MCP) today is that it heavily relies on a DIY (Do It Yourself) setup, especially involving Docker containers.

Why Docker Is Required

- MCP servers typically run either as local processes that the AI application talks to over standard input/output, or as HTTP endpoints that it connects to.

- To run these servers securely and reliably, organizations usually containerize the MCP servers using Docker.

- Each external tool or internal system integration (e.g., GitHub, Jira, databases) often requires spinning up separate Dockerized MCP service containers.

Challenges This Creates

- Self-Hosting Burden: You need to manage hosting, networking, health checks, and uptime of these containers manually.

- DevOps Complexity: Setting up MCP servers across different environments (local development, staging, production) demands solid DevOps practices (container orchestration, monitoring, logging).

- Scaling Issues: As the number of integrations grows, so does the overhead of maintaining multiple containers — each requiring version updates, security patches, and resource management.

- Security Risks: Poorly configured Docker containers (e.g., open ports, missing authentication) can expose sensitive data or systems to vulnerabilities.
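The security risk in the last point can be checked mechanically. Below is a minimal sketch, assuming you keep a simple inventory of your self-hosted MCP containers. The ContainerConfig fields and the container names are hypothetical and are not part of Docker or MCP.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ContainerConfig:
    """Hypothetical inventory entry for one self-hosted MCP server container."""
    name: str
    bind_address: str   # host interface the port is published on
    port: int
    auth_required: bool


def audit(configs: List[ContainerConfig]) -> List[str]:
    """Return human-readable warnings for risky settings."""
    warnings = []
    for cfg in configs:
        # Publishing on 0.0.0.0 (or ::) exposes the port on every interface.
        if cfg.bind_address in ("0.0.0.0", "::"):
            warnings.append(
                f"{cfg.name}: port {cfg.port} is published on all interfaces"
            )
        if not cfg.auth_required:
            warnings.append(f"{cfg.name}: no authentication configured")
    return warnings


configs = [
    ContainerConfig("github-mcp", "127.0.0.1", 8001, auth_required=True),
    ContainerConfig("jira-mcp", "0.0.0.0", 8002, auth_required=False),
]

for warning in audit(configs):
    print(warning)
```

Here only jira-mcp is flagged, once for its bind address and once for the missing authentication. A check like this is one more thing a self-hosting team has to write and keep current.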

Summary

✅ Flexibility: You control exactly which tools, APIs, and data are exposed to your AI assistant.

❌ High Setup Cost: Without managed services, setting up a robust MCP environment today means building and maintaining your own containerized infrastructure.

In short: Adopting MCP in its current form assumes a DIY model with Docker at the core, requiring technical expertise and operational discipline to use safely and effectively.

How Sophi Runs MCP Servers Remotely to Improve Knowledge Access

Sophi solves many of the traditional pain points of MCP by running all MCP servers remotely in the cloud, rather than forcing users to DIY with local Docker containers.

Key Improvements Sophi Brings:

1. Fully Managed MCP Servers in the Cloud

- Instead of asking each user to spin up and maintain their own containers, Sophi hosts scalable, secure MCP servers on dedicated cloud infrastructure.

- These servers are pre-integrated with key systems (code repositories, databases, APIs) and continuously updated.

▶️ Result: Users get seamless access to tools and knowledge without worrying about hosting, scaling, or securing containers.

2. Always Up-to-Date Knowledge Base

- Sophi syncs live with both private company data (internal repos, documents, APIs) and public sources (framework updates, open-source libraries, vendor documentation).

- Cloud syncing ensures that changes are reflected instantly without manual refreshes.

▶️ Result: Every query is answered with the latest available information, improving reliability and trust.

3. Reduced Latency

- By centralizing MCP servers in optimized cloud regions and using intelligent caching, Sophi dramatically reduces round-trip times for queries.

- This setup avoids the slow, error-prone performance issues often seen with locally-run Docker containers.

▶️ Result: Faster response times — AI feels as fast and fluid as working locally, even when querying complex knowledge graphs.
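The article does not describe how Sophi's caching works, so the following is only a generic illustration of the idea: a time-to-live (TTL) cache that skips the round trip when a recent answer is already available. The TTLCache class and slow_lookup function are assumptions made for this sketch.

```python
import time
from typing import Any, Callable, Dict, Tuple


class TTLCache:
    """Cache query results for a fixed time-to-live, in seconds."""

    def __init__(self, ttl: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl
        self.clock = clock  # injectable so the cache can be tested without sleeping
        self._entries: Dict[str, Tuple[float, Any]] = {}

    def get_or_fetch(self, key: str, fetch: Callable[[], Any]) -> Any:
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and now - entry[0] < self.ttl:
            return entry[1]          # fresh hit: no round trip needed
        value = fetch()              # miss or stale entry: query the server
        self._entries[key] = (now, value)
        return value


calls = []


def slow_lookup() -> str:
    """Stand-in for a remote query; records how often it is invoked."""
    calls.append(1)
    return "result for auth_service.py"


cache = TTLCache(ttl=30.0)
cache.get_or_fetch("auth_service.py", slow_lookup)
cache.get_or_fetch("auth_service.py", slow_lookup)
print(len(calls))  # the second call is served from the cache
```

The TTL is the knob that trades freshness for speed: a shorter value keeps answers closer to live data, while a longer one saves more round trips.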

4. Improved Search Relevancy

- Sophi doesn’t just fetch information — it intelligently re-ranks and filters search results based on user context (project, team, system mentions).

- The cloud backends constantly optimize search algorithms using real-time feedback and semantic understanding.

▶️ Result: Users see the most contextually relevant answers first, with minimal noise.
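As a rough illustration of context-aware re-ranking (not Sophi's actual algorithm, which the article does not specify), the sketch below pulls @mentions out of a query and boosts results whose path matches one of them. The Result type, the scores, and the boost value are all hypothetical.

```python
import re
from dataclasses import dataclass
from typing import List

# Matches mentions such as @auth_service.py or @frontend-repo.
MENTION = re.compile(r"@([\w./-]+)")


@dataclass
class Result:
    path: str
    base_score: float  # relevance reported by the underlying retriever


def extract_mentions(query: str) -> List[str]:
    """Return the names the user mentioned, without the leading @."""
    return MENTION.findall(query)


def rerank(results: List[Result], mentions: List[str], boost: float = 0.5) -> List[Result]:
    """Sort by base score plus a fixed bonus for results matching a mention."""
    def score(result: Result) -> float:
        matched = any(m in result.path for m in mentions)
        return result.base_score + (boost if matched else 0.0)

    return sorted(results, key=score, reverse=True)


query = "Why does login fail? @auth_service.py @frontend-repo"
results = [
    Result("billing/invoice.py", 0.70),
    Result("backend/auth_service.py", 0.55),
    Result("frontend-repo/src/Login.tsx", 0.40),
]

mentions = extract_mentions(query)
for result in rerank(results, mentions):
    print(result.path)
```

With these made-up scores, the two mentioned locations move ahead of the unrelated billing file even though it had the highest base score. A production system would likely combine more signals (project, team, feedback) than this single bonus.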

Why This Matters

| Challenge | Traditional MCP (Local DIY) | Sophi Cloud MCP |
|---|---|---|
| Setup Complexity | Manual Docker deployment | Zero-setup, fully managed |
| Data Freshness | Requires manual syncs | Live, auto-synced |
| Latency | High, especially on local machines | Low, globally optimized |
| Search Quality | Basic retrieval | Context-aware, ranked results |
| Maintenance | Self-managed updates & security | Fully automated |

✅ In short: Sophi turns MCP from a “developer burden” into a “plug-and-play superpower” — providing live, trusted, high-speed knowledge access without operational headaches.

